It’s amazing how cranky you get when you become an old man. You become far less tolerant of the low quality half-assed content being foisted onto paying customers in a perpetual race to the bottom. There was someplace talking about their high quality content looking to hire additional writers and they offered up a link to this post as the type of high quality content they were after. Well, the first few sentences honked me off and you are getting a post about why now.

Forth is an unusual language. It is primarily an interpreter, and has a command-line interface so that it can be used in real time. But it also has the ability to take sets of code and compile it. So it is something of a hybrid.

The first statement is correct. The sentences following are where the wheels came off the cart. Look, I get it. If you are a Millennial who can’t look up from their identity theft enabling device and never knew a world which didn’t have it, you have no idea just how common interpreted languages were and still are. Especially languages which can be both interpreted and compiled.

What made Forth unusual was the fact it was a dictionary language. It came with a limited set of words in its dictionary and you were expected to extend that dictionary to suit your needs. The words in this dictionary formed the syntax of the language. You basically extended the syntax with each word added to your local/project/company dictionary.
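To make the dictionary idea concrete, here is a minimal sketch in Python (not Forth, and not how polyForth or any real Forth is implemented). It assumes a tiny starter dictionary of three words and a `: name ... ;` form for defining new ones. The names `make_dictionary` and `run` are made up for illustration.

```python
# Minimal sketch of a Forth-style dictionary interpreter (illustrative only).

def make_dictionary():
    """Return a small starter dictionary mapping words to Python callables."""
    return {
        "+": lambda s: s.append(s.pop() + s.pop()),
        "*": lambda s: s.append(s.pop() * s.pop()),
        "dup": lambda s: s.append(s[-1]),
    }

def run(source, dictionary, stack=None):
    """Interpret whitespace-separated tokens; ': name ... ;' defines a new word."""
    stack = [] if stack is None else stack
    tokens = source.split()
    i = 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == ":":
            # Everything between the name and the closing ';' is the body.
            end = tokens.index(";", i)
            name, body = tokens[i + 1], " ".join(tokens[i + 2:end])
            # The new word simply runs its body against the same stack.
            dictionary[name] = lambda s, body=body: run(body, dictionary, s)
            i = end
        elif tok in dictionary:
            dictionary[tok](stack)
        else:
            stack.append(int(tok))  # anything else is assumed to be a number
        i += 1
    return stack

words = make_dictionary()
run(": square dup * ;", words)       # extend the dictionary
run(": cube dup square * ;", words)  # new words can build on earlier ones
print(run("7 square", words))
print(run("3 cube", words))
```

Once `square` and `cube` are in the dictionary they are used exactly like the built-in words, which is the sense in which each shop ends up with its own dialect.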

While making the language very extensible, it also made the language isolating for developers. Someone who wrote IBM Mainframe COBOL for N number of years at one company could take a job at another company which also used IBM Mainframe COBOL and be productive in under a week. While the two companies may have written many different library routines and have completely different business applications, the syntax of the language was always the same no matter where you went. Okay, yes, every so many years there was a meeting of the standards committee and the syntax got tweaked, but few things ever got dropped from the language. When you left one Forth shop to go work for another, the bulk of what you knew stayed behind unless you were allowed to take it with you on some form of media.

My exposure to Forth was a long time ago. I was a midnight computer operator at Airfone, Inc. working my way through to a bachelor’s degree in computer programming. The engineers used a product called polyForth for some of the ground station systems. (Possibly all. I was really just a kid and the scope wasn’t important to me.) When I had free time, a few of the engineers were kind enough to let me read their polyForth manuals and even borrow one of the Interpreters to use in the computer operations room. Since I worked directly with the ground stations collecting call records, we got to know each other a little. Whenever they were rolling something new out to the ground stations, one or more would be in during that evening’s record collection just in case something went sideways.

I do know that this concept of a dictionary/syntax which would not be completely the same between two different companies turned me off. In school at the time I was learning DEC BASIC, DEC and IBM COBOL, Turbo PASCAL and a host of other things which were the same everywhere you went. I did not wish to be trapped. You see, I went to college during the early 1980s. This is when the first massive wave of off-shoring swept through America. When I started taking classes at the local Junior College for computer programming there were guys who had spent 20+ years working in a factory which was no longer around. All they knew was how to make product X which was now being made in Mexico, China or some other cheap labor country. When the factory left not only did their job leave, so did their self-respect. They were what they did and they had to come to terms with the reality that their high-school-aged kids had more marketable job skills than they did after 20+ years of working. These were the smart ones who swallowed their pride and took the job training. The rest weren’t enrolled in the training, refusing to admit their skills wouldn’t get them a job. The thought of devoting my scarce free time to learning a language I couldn’t really take with me shop to shop reminded me of those factory workers and I didn’t want to be them.

Now that I’m older and have done quite a few embedded touch screen systems I understand more. I was thinking in terms of stable corporate jobs which paid well and didn’t understand the life cycle of embedded systems. Payroll and accounting systems written in COBOL will be running on large computers long after the human race ceases to exist. In truth, with all of the automated trading systems out there the stock market will probably continue trading every weekday long after the last human has expired. These large systems were the world I was pointing myself at because they would be around forever. The embedded world isn’t like that. Every product is spun up from scratch. Every product is different. Oh sure, you may use some of the same tools. My projects have all used C++ with Qt on a custom built version of Linux but that is all they really have in common. Other than something basic such as an error or message logging routine nothing really can move between projects. A pressure and leak testing device doesn’t really have anything in common with a patient vital signs monitor or a vending machine. That type of device control world is what Forth was created to serve. It wasn’t meant to be payroll and accounting or high volume transaction processing, it was meant to exist inside an embedded system and each system would start out with a very limited set of words like a child. As it grows it develops a larger vocabulary until it becomes an entity of its own with certain capabilities.

A programming language which could be both interpreted and compiled was nothing new. We had quite a few of them and the IT industry develops more of them each year. I can no longer keep track of all the interpreted languages which can be compiled. In truth there are varying definitions of “compiled.” Some languages compile to p-code, an intermediate instruction set which executes within a virtual machine. The “p” is usually read as “pseudo” or “portable,” and you can think of the result as partially compiled. In truth it becomes a data set which gets fed into the virtual machine and that virtual machine does things so we call it a program. Java, with its bytecode, would be a widely known example of such a language. Another definition of compiled means a native binary. It runs within the operating system natively on a computer.
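The “data set fed into a virtual machine” idea can be sketched in a few lines of Python. This is a toy under stated assumptions: the opcode names `PUSH`, `ADD` and `MUL` and the function `run_pcode` are invented for illustration and are not Java bytecode or any real p-code format.

```python
# Toy stack-based virtual machine: the "program" is nothing but data.

def run_pcode(program):
    """Execute a list of (opcode, argument) pairs and return the final result."""
    stack = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()

# What a compiler might emit for (2 + 3) * 4 in this made-up instruction set.
PROGRAM = [
    ("PUSH", 2),
    ("PUSH", 3),
    ("ADD", None),
    ("PUSH", 4),
    ("MUL", None),
]

print(run_pcode(PROGRAM))  # prints 20
```

`PROGRAM` never becomes machine instructions. The loop inside `run_pcode` is the only thing the processor actually executes, which is the difference from a native binary.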

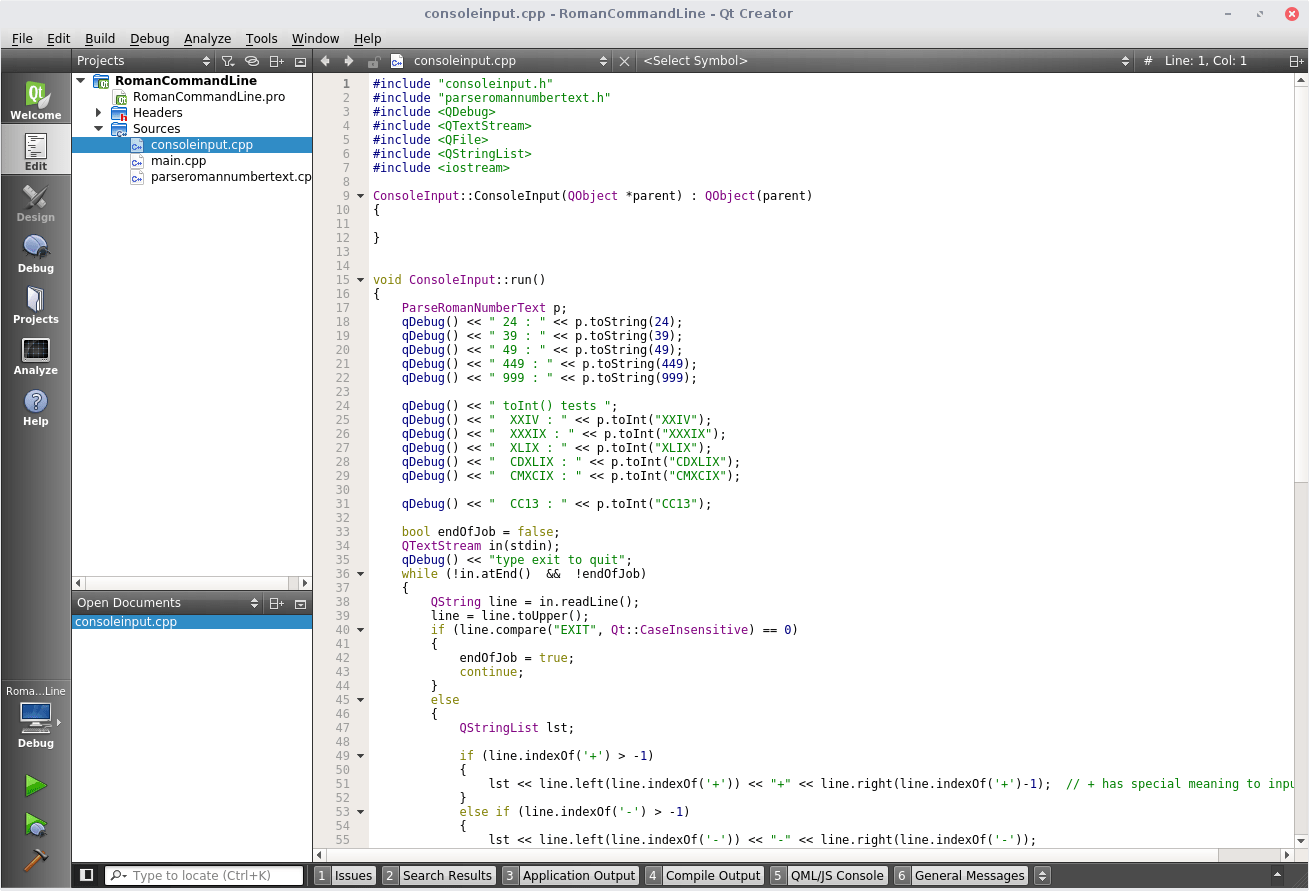

Interpreted languages were necessary in the early days of computing. We did not have fancy color coded IDEs (Integrated Development Environments) like the first image in this article. Once we graduated from punched cards and paper tape we moved to paper terminals. If you were really lucky you had a fancy one like the DEC LA-120 shown in the image. We did not have graphical debuggers. We had a line editor and the BASIC interpreter. If you wanted to see a particular part of the code you would tell it to LIST 20-50 and it would grind out lines 20 through 50. In the early days of BASIC each line had to have a line number assigned. Typically you used increments of 10 on your first draft, leaving unused line numbers in between in case you needed to insert more code later. The interpreter understood some basic commands such as LOAD to load a source file into the interpreter, RUN to execute, SAVE, etc. We did not have oceans of memory to work with so each BASIC subroutine was very short. A good many business systems were written in various dialects of interpreted BASIC. On some of the larger computer platforms we eventually got the ability to compile this source code into a native executable. Having an interpreted environment to bang out and test a subroutine or program in and be certain it works before being added to the collection at the site was a massive boon to programmer productivity.
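For anyone who never used one, the line-number trick can be sketched in Python. This is only an illustration of the idea, not how any DEC BASIC stored programs. The helper names `enter` and `list_lines` are hypothetical.

```python
# Sketch of line-numbered program storage, as in early BASIC interpreters.

def enter(program, line):
    """Store a numbered line, replacing any existing line with that number."""
    number, _, text = line.partition(" ")
    program[int(number)] = text

def list_lines(program, first, last):
    """Return the stored lines numbered first through last, in numeric order."""
    return [f"{n} {program[n]}" for n in sorted(program) if first <= n <= last]

program = {}
enter(program, '10 PRINT "HELLO"')
enter(program, "20 GOTO 10")
enter(program, '15 PRINT "WORLD"')  # slots into the gap between 10 and 20

for text in list_lines(program, 10, 20):  # the equivalent of LIST 10-20
    print(text)
```

Line 15 was typed last but lists between 10 and 20, which is why you left gaps on the first draft. Retyping a line number replaced that line, and that was the whole editor.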

One would think interpreted languages would not have been a choice for computers with limited resources, but that thinking would be wrong. We did not have robust operating systems. People who needed to get to the bare metal for ultimate speed used Assembly language and people who just needed “business logic” used the interpreted languages. Data storage was expensive so only companies with massively deep pockets could afford the storage required by a million or more records and they tended to use compiled COBOL.

I do not know if there was ever a COBOL interpreter. Given the nature of the language I doubt it, but there were quite a few PC based IDEs for COBOL. The C programming language had a C interpreter product during the days of DOS. I never used it, but I believe it was called “Instant-C.” Eventually great editors morphed into IDEs and into the products we are familiar with today, but that was many years off.

Oh, you always-connected Millennials, we could always be connected too if we wished. All we needed was a power outlet and a standard desk phone. Hopefully you will click that image and take a good look at the machine we lugged around. Yes, you could get a carrying case with a handle. Hopefully you clicked the image to look at the page with all of the other images. I really hope you noticed the acoustic coupler modem on the side. Unless you know what the handset of a standard desk phone of the era looked like, you might not guess what those 2 round rubber looking things on the side were. Yes, we manually dialed the phone number on the phone then stuck the handset in those things so the modems could begin talking and we could begin working remotely, limited only by how much paper was left on the spool on the back. There was no Internet to surf for videos on.

Realistically, this wasn’t all that long ago. The LA-12 was introduced some time prior to 1983. I actually had one of these for a while. Even after people wanted actual screens to work on, these paper terminals were still kept around. Why? They could double as printers. They had serial ports on them so just hook them up and stream your listing.

Paper terminals like the LA-120 were standard system consoles for many years after terminals and personal computers became the big sellers. The big box of continuous form provided a system log which didn’t require expensive/fragile magnetic media storage. It is because of these working conditions that interpreted languages became popular even if they weren’t the speediest. During the earliest personal computer days there was even a spreadsheet application written in interpreted Pascal if I remember correctly. Of course, once users tried a spreadsheet application which had been compiled to a native executable, the market kind of disappeared for that product.

Eventually we graduated to the DEC VT-52 terminal. Yes, it was actually a terminal. The operating system now even gave us an editor which could use the whole 24 lines of the terminal. Take a good look at the keyboard though, we did not have arrow keys. No, we didn’t have that hokey little PC trick of putting arrow images on the numeric keypad. We did have keypad navigation, but it was specific to the editor.

At any rate, these were the tools we had to work with when Forth was created. The personal computers weren’t any better. Most did not have hard drives, just cassette tape or 180K single sided 5.25 inch floppies. The interpreter helped make development across two different computer chips both faster and easier. Once development and testing were “finished” you had the option of creating a fully compiled version to load on whatever the device was during manufacturing.

Every tool has a purpose.

Not every purpose has a tool.

Roland Hughes started his IT career in the early 1980s. He quickly became a consultant and president of Logikal Solutions, a software consulting firm specializing in OpenVMS application and C++/Qt touchscreen/embedded Linux development. Early in his career he became involved in what is now called cross platform development. Given the dearth of useful books on the subject he ventured into the world of professional author in 1995 writing the first of the “Zinc It!” book series for John Gordon Burke Publisher, Inc.

A decade later he released a massive (nearly 800 pages) tome “The Minimum You Need to Know to Be an OpenVMS Application Developer” which tried to encapsulate the essential skills gained over what was nearly a 20 year career at that point. From there “The Minimum You Need to Know” book series was born.