I’ve written about Manjaro many times on this blog. Other than a lack of testing with NVIDIA cards and drivers, it’s hard to find fault with this distro. They are definitely doing a better job than Canonical is doing with Ubuntu. I had made my peace with the poor performance of Ubuntu 20.04 LTS, believing it a price worth paying for the embedded systems development support. Testing out 22.04 changed all of that.

Granted, my machines are a long way from bleeding edge, but a gen-4 i7 with 24GB of RAM, a 1TB SSD, and a 4TB WD Black ought to run fine. I have an HP Z820 with 120GB of RAM, 20 physical cores, an SSD, a 6TB WD Black, and a RAID array for doing Yocto/BitBake builds. Standard open source development doesn’t really stress a machine. With Ubuntu, part of me was beginning to believe I would have to apply Microsoft’s second solution to everything: throw hardware at it.

Manjaro is consistently finding its way onto lists of the best Linux distros for beginners.

The Boon and the Bane

If you live long enough, everything you think is currently cool and bleeding edge in IT will go out of style, be declared extinct, and resurface under a new name as the exact same thing, once again sexy. The MUMPS (now M) programming language and PICK BASIC championed NoSQL databases in the 1970s. They were declared extinct by the trade press around the 2000s. Today kids are all gaga over NoSQL.

The x86-wanna-be-a-real-computer-one-day-when-I-grow-up market has gone through the cycle many times. IBM used the 8088 instead of the 8086 in the first IBM PC because it was cheaper. It was a 16-bit processor with an 8-bit bus. The 80286 was a godawful creation that moved the IBM-compatible PC world onto a 16-bit bus. Programs had to reset the CPU to get back out of protected mode into real mode. It was horrible, yet I spent my own money on, and loved, my AST Premium 286.

Processor model numbers kept climbing in the third digit. The 80386 and 80386SX were followed not long after by 80486 versions. You can read about the 486 wars here. Despite the reality, regular people viewed the first 486 as a 386 with a built-in math coprocessor.

Why Memory Lane?

Because everyone paid a high price for Intel’s “backwardly compatible” decision. No matter how bleeding edge you thought your computer was, it was running code compiled for an 8088. I know; I wrote a lot of it. The Watcom C/C++ compiler was the gold standard for compiling to other target CPUs. It had glacial compile times but produced the purest binaries and was the only compiler used for Novell NetWare. The NetWare server software targeted specific processors.

Jane and Joe Consumer went into a big-box software store and bought software compiled for an 8088. Manufacturers wanted their software to run on every IBM-compatible PC out there, so they targeted the least common denominator.

What do you think the Linux distros are doing?

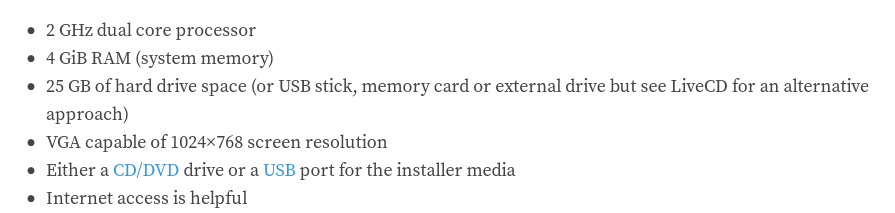

Ubuntu 20.04 system requirements found here:

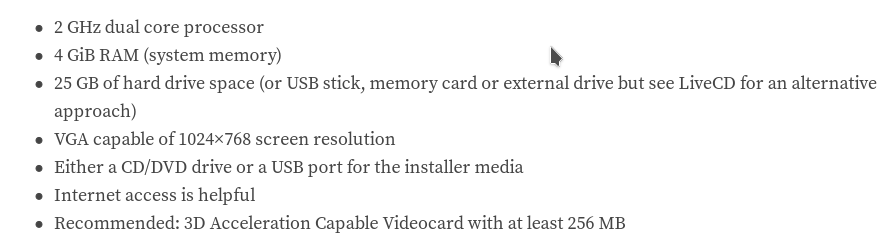

Ubuntu 22.04 system requirements found here:

You will note the additional recommendation. I personally believe this is where much of the sluggishness comes from. Well, that and “modern” browsers starting hundreds, perhaps thousands, of threads “just in case they need them.”

You can go find the minimums for your favorite RPM-based distro as well.

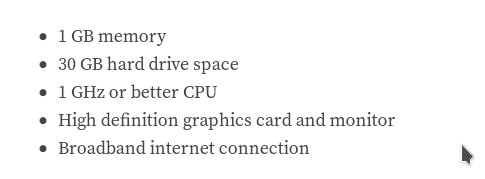

Just to confuse you, Manjaro minimum system requirements found here:

Well, shouldn’t Manjaro suck then?

Different philosophy. Arch has always been an “expert friendly” distro. Many of its denizens were viewed like those characters on The X-Files who used live bugs to play chess, checkers, or whatever that board game was. Arch, and many of the distros based on it or at least following the religion, believed you should build everything yourself.

Fine! Most people don’t know how to do that, hence the reputation. Things have gotten better over the years with the creation and policing of the Arch User Repository (AUR). Manjaro and many other distros now have built-in tools to “simply install” from the AUR. You just watch entertaining text scroll by on the screen while it pulls down everything it needs and builds it. This gives the build script the option of targeting your specific processor as its baseline. If you move from a gen-4 i7 to a gen-7 i7, software built for your actual CPU gets more benefit from the upgrade than binary code compiled for the first dual-core CPUs released circa 2005.

Any time you have pre-built binaries, you have a least common denominator situation. It is possible to write a “Live Disc” application compiled for the 80386 (or maybe even the 8088) that will “run” everywhere, have it check your CPU, and then pull down binaries built for that CPU, or at least for a much closer baseline.

Here’s the other reason

The Arch world is “expert friendly.” It is mostly made up of grizzled old developers who earned actual degrees in software development and learned to design for low-resource systems. Many of the volunteers I encounter working on Ubuntu and other big-name distros are kids still wet behind the ears. They’ve never had anything less than a high-end gaming system, and they can’t imagine anyone running less than that as a baseline. They will create hundreds of threads, allocate huge buffers, rely on hundreds of CUDA cores, etc.

Oddly enough, we are right back to the 1970s, when you had to cross-compile Unix from source for a new processor. Then came the 1990s, when many of us compiled Linux and installed it beside DOS, dual-booting with LILO. Now we have distros booting a least-common-denominator Linux, pulling down what they need, and building it to target your specific processor. We simply couldn’t do that in the dual-floppy and dial-up days.