We are all pack rats. “Safety copies” is how we justify duplicate files. Keeping duplicate files used to mean keeping stacks of floppy disks. Remember those?

Sony used to sell shrink-wrapped bricks of 100 that I would go through every few weeks. They were much nicer than the bales of 5 1/4-inch disks. How many of you had one of those big wooden 3-drawer storage cabinets for 5 1/4-inch floppies? I did. Each drawer held one week’s backup. An 80 MB hard drive took about 30 floppies to back up. The stuff you really needed you kept duplicates of on different floppies “in a safe place.”

At least when it came to the 3.5 inch floppies I got down to two single storage units. I still use these for LS-120 disks when I’m writing a new book. You can’t put a label on a thumb drive.

Today

Today we have NAS storage and 16TB is nothing. I have a drawer full of thumb drives with no idea what is on most of them. Once they get used for a project or client they “get put in a safe place” because you can’t really get a sticky note to stay on them. When I get home from a long-term project I immediately upload directories from my laptop and the thumb drives I took with me. Much of it is document files that I already have on at least three machines, but something might have changed. I wouldn’t want to lose any of the new files I created!

Eventually the day comes when you are trapped in the office without anything pressing to do. You notice that your NAS is showing it is more than 80% full and you scream in your head “Enough!”

Oh, the dread of having to manually compare this stuff. Isn’t there a tool?

Enter fdupes

Be careful! Don’t just let this thing nuke whatever it considers a “dupe.”

Why?

Do you make point-in-time snapshots of working code or documents? Do you need to keep them for FDA or other regulatory reasons? Does every pimp send you the signed contract as “contract.pdf”? There are a host of reasons to still have some duplicate files. After you install this wonderful tool you need to generate a list so you can judiciously purge.

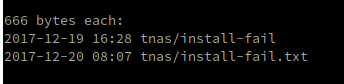

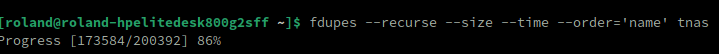

`fdupes --recurse --size --time --order='name' directory-path`

Substitute the root of the directory tree you want searched for directory-path. This will get you a nice list of files. Yes, it will take a bit. This performs more than a file name comparison. You still shouldn’t just blindly trust it and let it delete. You might want to redirect the output to a file because the list can be very long.
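To give a feel for what “more than a file name comparison” means, here is a minimal Python sketch of content-based duplicate detection. It is not fdupes’ code. The fdupes man page describes comparing file sizes and MD5 signatures, followed by a byte-by-byte comparison; this sketch swaps in SHA-256 and skips the byte-by-byte step. The names `file_digest` and `find_duplicates` are made up for illustration.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return a list of (size, [paths]) for files with identical content.

    Hypothetical helper: groups by size first, then by content hash.
    """
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks and anything that is not a regular file.
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    groups = []
    for size, paths in by_size.items():
        if len(paths) < 2:
            continue  # a file with a unique size cannot have a duplicate
        by_hash = defaultdict(list)
        for path in paths:
            by_hash[file_digest(path)].append(path)
        for same in by_hash.values():
            if len(same) > 1:
                groups.append((size, sorted(same)))
    return groups
```

Notice the file name never enters into it. Two files named “contract.pdf” with different contents are not reported, and two identical files with different names are. Checking sizes first is what keeps the run time bearable, since only files that share a size ever get read.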

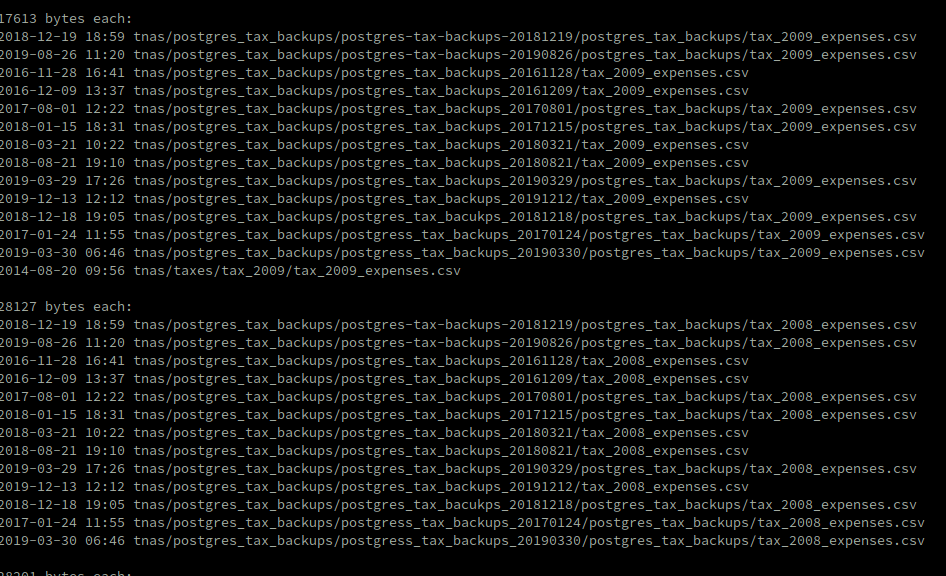

Yes, I deliberately have duplicates. They are backups of my tax database. I take various snapshots at various points in time. There are three things in this life that I fear having a problem with. Those would be “the I,” “the R,” and “the S.” Just remember that Al Capone went to prison for taxes, not for being a gangster who had people killed.

You will notice each block is grouped by file size. You get to see the timestamp and full path of each file. Run this way, fdupes only reports, so the decision to delete stays with you. Sometimes you want to save the stuff. Other times you want to blast an entire directory instead of individual files.

Looks like Satan was messing with me here!