I was going to title this post “The Death of Qt” but it is really all commercial event driven application frameworks. To understand this you need to take a journey with me. Most of you reading this aren’t as old as me and didn’t live through all of it.

What brought this on? Continually getting questions about why things are the way they are in Qt and application frameworks in general has been a big incentive. All of the people in qt-interest asking for alternatives to QML (living embodiment of a failed experiment if there ever was one). The ever-growing list of customers reaching out to me with statements like this:

We just went through our annual discussions with Qtc and have determined not to use them again on future projects.

companies that used to use Qt

Honestly, I don’t know if Qtc has figured out just how many companies that either currently pay them money or have paid them money in the past simply aren’t going to pay them one red cent going forward. It’s not a small number and there are many reasons for it. A big part of it is the “everybody must buy a license” definition of OpenSource the company seems to use.

I will state up front that I don’t bother to look under the hood of Qt source code unless I have no other choice. Personally, I find the internal coding style and packaging of all things someone might actually want to change in hidden private classes reprehensible. I only build Qt in debug and step into it when I’m certain I’ve found yet another bug.

In the Beginning

Real Computers

I started in this field in the early 1980s. Then, as now, we had real computers with real operating systems and the hokey little personal computers with toy/hobby operating systems. Unless you were a student, when writing software for a real computer on a real operating system, you never thought about putting everything into a single executable. If you were on an IBM mainframe writing a simple report against an indexed file you might use the COBOL SORT verb and stuff the report in a single executable, but more often you did not. You still had to create JCL to run it so you might as well use the system SORT utility as part of the job stream.

PDP

In my PDP-11 days, we had 64K-Words. The machine had a whopping 2MEG of RAM but the OS and your application had to exist in that 64K-Words. Part of that RAM was used for I/O caching and the rest were 64K-Word chunks containing other processes that could be quickly switched to. Adding insult to injury, in the early days, we were using interpreted BASIC and later interpreted BASIC-PLUS. You split every function/subroutine into its own .BAS file. When you needed to pass parameters you made a system service call to write a big string out to CORE (or disk) then

    CHAIN INV010 2100

Use of CHAIN killed off your current program and all its memory. It then loaded the new .BAS file and optionally started execution at a given line number. The first thing you did was read that big string from CORE (or disk). It may sound hokey, but we ran 64 concurrent users all typing away at their terminals entering orders, printing invoices, etc. The OS provided record locking, security, and a host of other services that still don’t really exist on the x86.

By and large the operating systems were designed for time sharing. The DEC 10 and DEC 20 were true time sharing machines as were some others. What I mean by time sharing in this case is several entities went together to buy a computer. The operating systems had detailed accounting for every clock cycle, byte of RAM, and block of storage used by every process and user account. People paid for access and use.

Personal/Hobby Computers

The first computer I bought with my own money while going to school was an NCR PC-4. Dual floppies and a ghosting green monitor. I splurged to get the full 640K. (Most people tried to squeak by with 256K and some with only 512K.) It came with DOS and some form of BASIC. Crude BASIC, nothing like what we had on real computers. The one and only editor was the line editor edlin. You couldn’t do fancy things like use indexed files or write a string to CORE while CHAINing to another program. When I finally added a hard drive to my PC-4, it was a whopping 20MEG. I had to build a wood enclosure for it and the extra power supply then run the cable into my machine. I can still hear the chirping cricket of the Seagate drive.

In the Middle

DOS Got a GUI

DOS got a GUI and it was called Windows. OS/2 also came along and it didn’t have the audacity to call the GUI it had (Presentation Manager) an “operating system.” Windows was not an operating system until NT, it was just a task switching GUI layered on top of DOS. Buried in the AUTOEXEC.BAT file was one line WIN.

    @echo off
    SET SOUND=C:\PROGRA~1\CREATIVE\CTSND
    SET BLASTER=A220 I5 D1 H5 P330 E620 T6
    SET PATH=C:\Windows;C:\
    LH C:\Windows\COMMAND\MSCDEX.EXE /D:123
    DOSKEY
    CLS
    WIN

When you exited Windows you were right back at the DOS prompt.

DOS did not have multi-tasking and Windows only had task switching. There was this unfortunate class of programs called TSRs (Terminate Stay Resident). This is actually how the mouse was supported. DOS didn’t have device drivers per se. It had TSRs that could hook various BIOS interrupts to do things. This was how you supported a mouse, CD drive, etc. One of the more popular third party TSRs was Borland Sidekick.

You could define a hotkey and it would pop up when you hit it. It had a calculator, notepad, and a few other things. The computer actually branched to this executable in memory then branched back when done. We still didn’t have anything along the lines of good indexed files, multi-tasking, or multiple applications. You could only do one thing at a time. IT students were made to take an Assembly language class so we understood this fact of life.

Because of this single core and feeble OS reality, every program had to be a single executable. Either .exe or .com. Under the DOS GUI known as Windows the DLL (Dynamic Link Library) was introduced in a desperate attempt to shrink executable size. Under DOS you had to statically link everything into that executable. Today under Linux an AppImage bundles many executables and libraries into a single runnable executable.

Real Computers

Real computers had big similarities and differences at this time. I cover a broader amount of this in my latest book. One large difference for you to understand is that we had thousands of processes running under hundreds of different user identities all at the same time. They were all different executables.

I also have a not short post on here about Enterprise Class Computing.

Similarities

Recovery

IBM and DEC both had native indexed file systems that had journalling and restart capabilities. If your job fell over, as long as you were committing data every so many transactions, you could clean up the few uncommitted transactions and restart from just after the last commit point. If you did the work up front restarting really was that easy. Those who took shortcuts paid a heavy price in time and effort when a recovery had to occur. Crashes were rare, usually due to disk space issues, but they happened. One crash I know of happened because the UPS (Uninterruptible Power Supply) maintenance person disregarded a big warning sticker and took off a panel rather than walk around to do maintenance. He left in a body bag because the human body can’t tolerate that much voltage. The computer system didn’t like losing power either.

Batch and Print Queues

Every Midrange and Mainframe operating system on the market had batch and print queues. You could have hundreds of batch queues and the only real limit for print queues was the number of connections you purchased on your hardware. All kinds of security could be set on these queues so only certain users or groups of people could utilize them. Print queues could be manipulated by systems operators who had to tell the queue what form was currently mounted. The operating system would then start releasing jobs that needed that form and were in that queue. They would even notify the computer operator when each print job completed. If you needed 15 copies, you just submitted with a copy count and the print queue did it. None of this creating a file containing 15 copies so the printer could just stream.

Even in the late 1980s batch queues allowed you to stack and control jobs. You could submit /HOLD or /AFTER. The “after” qualifiers could be a full date and time (as long as it was a future timestamp, no submitting jobs to run after the Battle of Hastings) or it could be another job in the queue. The batch queue software in the operating system would manage all of that. You could have hundreds of batch jobs running at the same time. Each would notify the operator and optionally the end user of its completion status.

Application Control Management System

On DEC it was simply called ACMS. On IBM it is a little known capability of CICS. Everybody thinks of CICS and green screen data entry, but it also had/has application control management capabilities. I even wrote a book on ACMS.

No matter how a message came in we had both guaranteed delivery and guaranteed execution. Even in the days of TCAM and bisync modems, once the package came in, it could not be lost. Compare that with the house of cards you have in e-commerce and its abandoned shopping cart epidemic. We didn’t build a house of cards.

Restartable Units of Work

I don’t encounter anyone in the x86 world that has any concept of this topic. In our architecture planning we divided each step of a task into restartable units of work. These units were distributed to task servers running under application servers under the protective umbrella of the application control management system. You could set min and max task server counts for each name. When there was no work only the min would be kept alive. As workload increased more would be started up to the max. Because of this throttle control, you could not bury a box no matter how you tried to flood it.

If a unit of work was being processed by a task server instance that fell over, the transactions would be automatically rolled back, a new instance started, and the unit dispatched to it. After a unit had caused a manually configured number of crashes it would be placed onto an error queue for humans to look into. Nothing could be lost. All orders (or whatever) would be processed.
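The crash limit and error queue described above can be sketched in a few lines of C++. This is only a toy under stated assumptions: `UnitOfWork`, `Dispatcher`, and the `handler` callback are hypothetical names, the "task server" is a plain function call rather than a separate process, and a `false` return stands in for an instance falling over with its transaction rolled back. It is not how ACMS or CICS were implemented.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <vector>

// Hypothetical unit of work: an id plus how many task servers it has crashed.
struct UnitOfWork {
    std::string id;
    int crashes = 0;
};

// Hypothetical dispatcher standing in for the application control manager.
struct Dispatcher {
    int max_crashes;                      // the manually configured limit
    std::deque<UnitOfWork> pending;       // units waiting for a task server
    std::vector<UnitOfWork> error_queue;  // units parked for a human to inspect
    std::vector<std::string> committed;   // ids of units that completed

    explicit Dispatcher(int limit) : max_crashes(limit) { assert(limit > 0); }

    void submit(const std::string& id) { pending.push_back(UnitOfWork{id, 0}); }

    // handler returns true on commit, false when the "task server" fell over.
    void run(const std::function<bool(const UnitOfWork&)>& handler) {
        while (!pending.empty()) {
            UnitOfWork unit = pending.front();
            pending.pop_front();
            if (handler(unit)) {
                committed.push_back(unit.id);
            } else if (++unit.crashes >= max_crashes) {
                error_queue.push_back(unit);  // parked, not lost
            } else {
                pending.push_back(unit);      // redispatch to a fresh instance
            }
        }
    }
};
```

Every unit ends up either committed or sitting on the error queue with its crash count. There is no third outcome where it silently disappears.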

Differences

Networking

Before we had the Internet every vendor had its own proprietary networking technology. It was expensive and it existed for a reason other than price gouging. Token Ring was really efficient for small local networks where users didn’t try to transfer a gig at a time. (Think order/data entry.) DECNet allowed just about everything to talk to it. PCs could even use the VAX storage system as well as print queues. Naturally you had to buy additional packages/gateways/etc. to make one system talk to another.

Data

IBM used EBCDIC and the rest of the world used ASCII. Every platform had slightly different definitions for PACKED-DECIMAL so they weren’t completely compatible. COBOL was the language of business and PACKED-DECIMAL was what it used for financial calculations. This was not a tiny issue. Each OS had different headers it would write on those big reel tapes. Even if you had purchased all of the networking packages you couldn’t “just transfer” data between IBM and others.

Fake Machines vs. Clusters

This is probably one of the biggest differences. IBM of the day believed in building one massive machine and giving it an OS and firmware combination that allowed it to be configured as multiple machines. Today people would try to call it virtualization but it wasn’t. Each was physically allocated CPUs, RAM, and storage.

DEC built smaller Midrange computers. When you needed more horsepower you could add another machine and cluster them together. Clustering isn’t the B.S. definition the x86 world tries to use, calling anything with a network connection a “clustered machine.” When a node joins a cluster it becomes one big machine. Uses the same UAF (User Authorization File) as every other node. Anyone on any node can use any resource (if they have the priv). Pre-Internet I worked on a cluster with an Illinois location, another somewhere in Germany, and one more in Puerto Rico that I knew of. All of their drives and queues appeared as local resources.

The x86 mind cannot really grasp how Earth shattering this is.

Different application servers could be distributed across every node in the cluster under ACMS. You could balance load and place work close to its data. Indexed files, relational databases, and message queues all participated in a single transaction that could commit or rollback across the entire cluster.

In later years IBM got something like clustering but I don’t remember what it was and I don’t know if it only worked on the AS/400 machines.

The Invasion Years

PC makers wanted to claw their way into the data center. It started out with file servers like Netware and kept growing from there. The lies were fabricated about it being “cheaper.” It never was. If you were willing to throw software quality and reliability under the bus you could use cheaper people who had no IT education, but producing a quality solution has and always will cost more. No, that hand polished turd you put together using AWS, CloudFlare, Azure, etc. isn’t a quality solution. You’ve just gotten used to the smell and the abandoned shopping cart epidemic caused primarily by random outages in the house of cards you have stacked between yourself and the end customer.

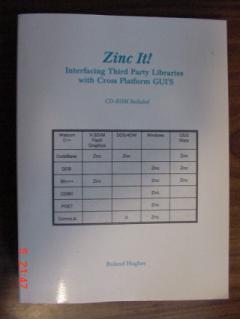

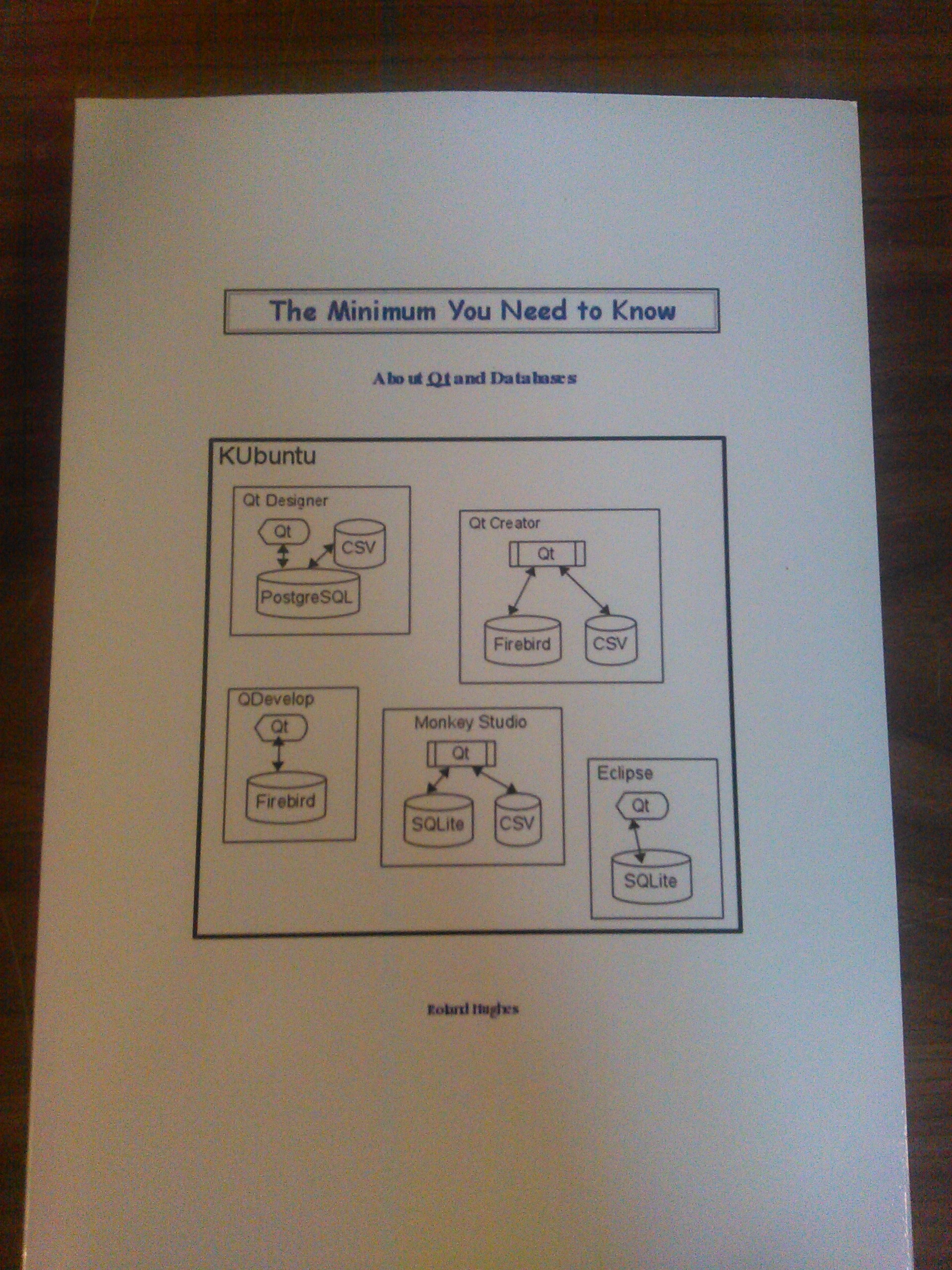

There were no end of snake oil peddlers. You really need to read the RAD (Rapid Application Development) section of The Minimum You Need to Know About the Phallus of AGILE. We went from application frameworks that were only a GUI like Zinc to full blown application generators bundling GUI, database, and report writer. Of course, you needed to purchase runtime licenses to distribute and per user licenses to run on corporate servers. I don’t think I ever encountered one that didn’t claim it could scale to enterprise size. Not one ever did this. They just changed the definition of “enterprise” to N-people in one room.

This was the era where an 80% solution was deemed “good enough.” There were a very narrow set of applications Pro-C (the C program generator not the Oracle thing), DataBoss, Lotus Approach, etc. could provide a 100% solution for. Getting the last 20% was generally either impossible or completely unmaintainable. I’ve written about this before too.

Application Frameworks Were Demanded

Keep in mind the C standard sucked and every library vendor tried to lock you into only their product. Most would point the finger at the other vendor if you couldn’t make their library work with it. Just about every library vendor had a

    #define TRUE 1

buried in a header file along with other common things. Some used values other than zero and one for TRUE and FALSE, instead relying on values that weren’t so common in memory. Zinc really honked off a lot of people by calling itself an Application Framework and making it a bitch to add support for anything other than a GUI. I know, I wrote the book.

Getting a serial port library to work with that was a PITA. People bought my book just to solve that problem for serial and other devices.

Of course MBAs demanded full blown application generators they could put low wage clerks in front of “so they didn’t have to wait on IT.” We will never know just how many companies went out of business because the “one computer” with the “one application” holding “all the data” died without a backup. Usually the person who developed that atrocity painted themselves into a corner they couldn’t hack their way out of and quit.

Widgets and Event Driven Programming

GUI DOS (Windows) introduced two things at the same time.

- Widgets

- Event driven programming

In true Microsoft tradition it made a dog’s breakfast of both. It later tried to “fix” things with VBx controls; enough said about those. Re-usable widgets wasn’t a bad concept. Event driven programming isn’t a bad concept . . . in theory. What got implemented was wretched.

One ring to rule them all was still the tiny x86 mindset at Microsoft. One program, one main event loop to dispatch to the rest of the application. At this point you have hamstrung your application to single-thread-y-ness. Every event must be caught and dispatched by a singular main event loop in the primary thread. Hello DOS 2.0. They tried to “fix” it with VBx controls where each control had its own event handling but they still had to get dispatches from the main event loop in the primary thread (as I remember). Developers simply got the convenience of drag and drop programming for simple applications. Take a look at some of the source code found in these places:

http://www.dcee.net/Files/Programm/Windows/

http://www.efgh.com/old_website/software.html

https://chenhuijing.com/blog/building-a-win31-app-in-2019/

INT 21h

Edit: 2023-02-15 – added this INT 21h section

Kids today don’t really understand how Intel and Microsoft screwed the pooch. They don’t understand why most everything on the x86 is viewed as a hobby rather than a real OS/platform/application. Everything on the PC was single threaded despite all of the claims of threading capabilities of the various tools, programming languages, etc. No matter how you split your application up, you had a single core CPU and you were hamstrung by INT 21h, the DOS Interrupt.

God forbid you needed to use the BIOS to update screen/video display while writing to disk data coming in via a serial port. You basically had everything tied up. “Another thread” could only do calculations. It could not perform any kind of I/O. We didn’t have these restrictions in the world of real computers with real operating systems. The hobby computer land of the x86 had them from day one. Having a main event loop that was just one gigantic switch statement was just ugly code. It didn’t impact your performance because the entire thing would be continually blocked by DOS and BIOS interrupts.
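For those who never saw one, here is a minimal sketch of the "one gigantic switch statement" main event loop. It is portable C++ written for illustration, not Win16 code: `EventType`, `Event`, and `App` are made-up names, and the queue stands in for the system message queue. The point is the shape: one loop, one thread, every event funneled through one switch.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <vector>

// Hypothetical event kinds; a real Windows 3.x program had hundreds of messages.
enum class EventType { KeyPress, MouseClick, Paint, Quit };

struct Event {
    EventType type;
    int param;
};

struct App {
    std::queue<Event> events;      // stand-in for the system message queue
    std::vector<std::string> log;  // records what each handler did

    void post(EventType type, int param = 0) { events.push(Event{type, param}); }

    // The single main loop: nothing else in the program ever sees an event.
    // Returns the number of events handled before Quit or an empty queue.
    int run() {
        int handled = 0;
        while (!events.empty()) {
            Event e = events.front();
            events.pop();
            ++handled;
            switch (e.type) {
            case EventType::KeyPress:
                log.push_back("key " + std::to_string(e.param));
                break;
            case EventType::MouseClick:
                log.push_back("click " + std::to_string(e.param));
                break;
            case EventType::Paint:
                log.push_back("paint");
                break;
            case EventType::Quit:
                log.push_back("quit");
                return handled;
            }
        }
        return handled;
    }
};
```

Now imagine each `case` growing to fifty lines and the switch growing to a hundred cases. A slow handler in any one of them stalls everything queued behind it.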

Your inbound device communication had to have interrupt handlers installed. That means just what it says. When a device either DOS or the BIOS knew about needed to say something to the CPU it would “fire” its designated (usually by DIP switch) interrupt. If your program (or a TSR) installed a handler for that interrupt the CPU would switch to execution of that short code. An IRQ handler could not do much because things were time sensitive. Typically a serial port IRQ handler simply grabbed the byte from the UART and stuffed it into a ring buffer in some part of shared memory. It could not do I/O as that would trigger another interrupt from within an IRQ handler.
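The ring buffer idea is simple enough to sketch. What follows is an assumption-laden illustration in modern C++, not period DOS code: `RingBuffer` and `on_serial_interrupt` are hypothetical names, the "interrupt" is just a function call, and a real handler would need volatile or atomic indices plus the actual UART port read. One slot is deliberately left empty so that full and empty can be told apart.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Fixed-size byte ring buffer; usable capacity is N - 1.
template <std::size_t N>
class RingBuffer {
    static_assert(N >= 2, "one slot stays empty to tell full from empty");
    std::array<std::uint8_t, N> data_{};
    std::size_t head_ = 0;  // next slot the handler writes
    std::size_t tail_ = 0;  // next slot the main program reads

public:
    // Called from the (simulated) interrupt handler. Drops the byte if full.
    bool push(std::uint8_t byte) {
        const std::size_t next = (head_ + 1) % N;
        if (next == tail_) return false;  // full
        data_[head_] = byte;
        head_ = next;
        return true;
    }

    // Called from the main program when it gets around to it.
    bool pop(std::uint8_t& out) {
        if (tail_ == head_) return false;  // empty
        out = data_[tail_];
        tail_ = (tail_ + 1) % N;
        return true;
    }

    std::size_t size() const { return (head_ + N - tail_) % N; }
};

// Hypothetical stand-in for the IRQ handler: grab the byte, stash it, get out.
template <std::size_t N>
void on_serial_interrupt(RingBuffer<N>& buffer, std::uint8_t uart_byte) {
    buffer.push(uart_byte);
}
```

The handler does no I/O and makes no calls that could block. All the real work happens later when the main program drains the buffer.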

These chips and tools weren’t designed to do real work. They could be useful to an individual, hence the name Personal Computer.

The C++ Standard Sucked

Everybody claimed object oriented programming would solve everything. We had endured C program switch statements exceeding 1000 lines writing even trivial Windows 3.x programs and we were desperate. OOP would fix everything! The Microsoft Foundation Classes would fix everything. Nope.

In its rush to protect everything the C++ programming language made sharing and cooperation nearly impossible. We had to do impossible to maintain things to make a class of one library a friend of a class from a different library just so it could execute a method in that class. Source code piracy from commercial libraries was rampant because they locked shit down and we needed that method of that class to be accessible. Of course once you stole that code and stuck it in your own class it never got any vendor fixes.

Enter Qt

Signals and Slots brought an end to vicious blatant code piracy just so we could execute a method. Yes, pre-processor munging of source files isn’t pretty and could lead to lots of stale stuff lying around, but you held your nose and used it. For the first time a class you wrote could have a method executed by the vendor of another library without having to be butchered into being a friend. This was a watershed moment!
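To show the idea rather than the Qt machinery, here is a bare-bones signal and slot sketch in standard C++. It uses `std::function` instead of the moc pre-processor, so it is not how Qt does it internally, and `Signal`, `VendorButton`, and `OrderForm` are invented names. What it does show is a "vendor" class calling a method on your class with no friend declarations and no stolen source.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Minimal signal: a list of callables invoked in connection order.
template <typename... Args>
class Signal {
    std::vector<std::function<void(Args...)>> slots_;

public:
    void connect(std::function<void(Args...)> slot) {
        slots_.push_back(std::move(slot));
    }

    void fire(Args... args) const {
        for (const auto& slot : slots_) slot(args...);
    }
};

// Hypothetical vendor widget. It knows nothing about the classes you write.
struct VendorButton {
    Signal<const std::string&> clicked;
    std::string label;

    void click() { clicked.fire(label); }
};

// Your class. An ordinary public method serves as the slot.
struct OrderForm {
    std::vector<std::string> received;

    void on_clicked(const std::string& who) { received.push_back(who); }
};
```

Wiring the two together is one `connect` call with a lambda that forwards to `OrderForm::on_clicked`. Neither class needs to include the other's header.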

Sadly, One ring to rule them all was still the design paradigm. Qt perpetuated single-thread-y-ness to all platforms, not just Windows. You still had to have a primary thread that contained the main event loop. All events had to go through there . . . sort-of. A QDialog class could have its own event loop despite the wails of angst from the C++/Qt purists. It was and still is decried as a broken model. No, the broken model was demanding a single event loop in the first place.

The OpenSource license was pretty liberal and many products were created with it. Tons of stuff got added to the library. Most of Qt today no single developer will ever use. There are too many modules that do too many things you don’t even know about.

Garbage Collection

The other big game changer Qt brought to the table was garbage collection. Every properly parented object would be deleted with the parent. When the application exited all of those objects would be swept up. You could still have memory leaks but junior developers had a much higher chance of creating usable code when the library was there to change the diapers.

Qt was not unique here. Many C++ UI and application framework libraries went down this path, even Zinc. Each had varying degrees of success. Garbage collection was sort-of the icing on the cake after signals and slots.

The downside of library provided garbage collection is it almost certainly requires some level of single-thread-y-ness. You have to have a registry of objects which is updated via every object creation. This has to be accessible via the primary thread. When the primary event loop exits, the code looks at this table and nukes everything.
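A parent that cleans up its children can be sketched with nothing more than `std::unique_ptr`. This is an illustration of the ownership idea only. `Node` and its destruction log are hypothetical, and this is not the registry mechanism described above or Qt's actual implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical parented object: each node owns its children outright.
class Node {
    std::string name_;
    std::vector<std::unique_ptr<Node>> children_;
    std::vector<std::string>* log_;  // records destruction order for the demo

public:
    Node(std::string name, std::vector<std::string>* log)
        : name_(std::move(name)), log_(log) {}

    ~Node() {
        children_.clear();  // children are destroyed before the parent finishes
        if (log_) log_->push_back(name_);
    }

    // The parent takes ownership; the caller gets a non-owning pointer back.
    Node* add_child(std::string name) {
        children_.push_back(std::make_unique<Node>(std::move(name), log_));
        return children_.back().get();
    }

    std::size_t child_count() const { return children_.size(); }
};
```

Destroy the top level window and everything parented beneath it goes too. Forget to parent an object and you are back to leaking memory, which is exactly the junior developer trap.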

Copy on Write

Copy on Write (CoW) is effectively banned by C++11 and later language standards, whose requirements on std::string rule out a reference counted implementation. It’s a mainstay of Qt. The CopperSpice port of Qt 4.8 removed CoW. When CoW is in use you cannot have exceptions. On slow processors like many low powered embedded systems or even the 486/SX desktop, CoW is a huge game changer. You can have sloppy code that needlessly creates hundreds of copies of objects passing by value and pay almost no penalty for it. Those other copies aren’t actually made, just kind of a ghostly image that points back to the actual data. Until an instance actually writes to or changes the data, everything just refers to the original instance.
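If the "ghostly image" description is too hand-wavy, this small sketch shows the mechanism. It is a single-threaded toy built on `std::shared_ptr`, and `CowText` is a made-up class, not one of the Qt containers. The `use_count()` check in particular is not safe if other threads are copying the same object, which is part of why CoW and threading mix so badly.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical copy-on-write string wrapper. Copies share one buffer until
// somebody writes.
class CowText {
    std::shared_ptr<std::string> data_;

    // Make a private copy only if another instance shares the buffer.
    void detach() {
        if (data_.use_count() > 1) {
            data_ = std::make_shared<std::string>(*data_);
        }
    }

public:
    explicit CowText(std::string text)
        : data_(std::make_shared<std::string>(std::move(text))) {}

    const std::string& read() const { return *data_; }

    void append(const std::string& more) {
        detach();
        data_->append(more);
    }

    bool shares_with(const CowText& other) const { return data_ == other.data_; }
};
```

Passing a `CowText` by value costs one reference count bump no matter how large the text is. The real copy only happens on the first write to a shared instance.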

When an exception is thrown you don’t have a local copy for error messages and God only knows what you still have access to somewhere else in the application. There are instances of really bad code that abuses temporary variables excessively where CoW makes it a non-issue. Read this post for more on that.

Sad Exit of OS/2

When OS/2 first came out, it rather sucked. IBM let Microsoft write too much code and Microsoft had no intention of letting IBM own the dominant PC operating system. Despite the evil intent of Microsoft, once IBM got rid of almost all the Microsoft code (except for that Windows compatibility stuff), OS/2 was a way better platform. It had far better memory management and network capability. If you were part of DevCon (or whatever it was called at the time) you had MQSeries, PL/1, REXX, and a host of other mainframe tools. There was little doubt we were going to get a CICS-based application control manager. Qt3 was used a lot on this platform.

Naturally IBM killed it rather than flexing their muscle to crush Microsoft. Single-thread-y-ness reigned.

Companies Have Stopped Paying for a Bottle Neck

I never thought I would hear myself say this, but “Thank God for just a GUI library!” Businesses have stopped paying money for everything-including-the-kitchen-sink application frameworks that come with single-thread-y-ness baked in. They will use some of the open source stuff, but now they want people who actually went to college for IT. Not just a college, but a good school. They fire developers who take a One Ring to Rule Them All design approach.

We are also seeing a huge surge in Immediate Mode GUI libraries. One everyone seems to get their feet wet with is Nuklear. Then they move on to NanoGUI, Elements, and others. Those who can’t quite shake Qt-like all things to everyone move to CopperSpice and wxWidgets. These last two come with the single-thread-y-ness main event loop issue, but they are actually OpenSource. You can use them in your commercial products free of charge.

Declarative UIs

Another big trend in today’s development is Declarative UI code. Qtc stuck with QML which is a hand polished turd I’ve written about before. You can’t add multiple engines/runtime environments to a single executable and have anything that is either stable or reliable. You can’t be mixing and matching scripted language that gets at least partially interpreted at runtime with a compiled language like C or C++. Read the above link for why.

Declaratives have gone a few different ways since the debacle of QML. The first is “you code it full compile.” Here is a snippet from an Elements example program.

    auto make_buttons(view& view_)
    {
        auto mbutton = button("Momentary Button");
        auto tbutton = toggle_button("Toggle Button", 1.0, bred);
        auto lbutton = share(latching_button("Latching Button", 1.0, bgreen));
        auto reset = button("Clear Latch", icons::lock_open, 1.0, bblue);
        auto note = button(icons::cog, "Setup", 1.0, brblue);
        auto prog_bar = share(progress_bar(rbox(colors::black), rbox(pgold)));
        auto prog_advance = button("Advance Progress Bar");

        reset.on_click =
            [lbutton, &view_](bool) mutable
            {
                lbutton->value(0);
                view_.refresh(*lbutton);
            };

        prog_advance.on_click =
            [prog_bar, &view_](bool) mutable
            {
                auto val = prog_bar->value();
                if (val > 0.9)
                    prog_bar->value(0.0);
                else
                    prog_bar->value(val + 0.125);
                view_.refresh(*prog_bar);
            };

        return
            margin({ 20, 0, 20, 20 },
                vtile(
                    top_margin(20, mbutton),
                    top_margin(20, tbutton),
                    top_margin(20, hold(lbutton)),
                    top_margin(20, reset),
                    top_margin(20, note),
                    top_margin(20, vsize(25, hold(prog_bar))),
                    top_margin(20, prog_advance)
                )
            );
    }

You should also check out LVGL because it is actively supported by industry deep pockets. I’ve not yet used it but the example code isn’t that much different than the above.

    /**
     * A slider sends a message on value change and a label display's that value
     */
    void lv_example_msg_1(void)
    {
        /*Create a slider in the center of the display*/
        lv_obj_t * slider = lv_slider_create(lv_scr_act());
        lv_obj_center(slider);
        lv_obj_add_event_cb(slider, slider_event_cb, LV_EVENT_VALUE_CHANGED, NULL);

        /*Create a label below the slider*/
        lv_obj_t * label = lv_label_create(lv_scr_act());
        lv_obj_add_event_cb(label, label_event_cb, LV_EVENT_MSG_RECEIVED, NULL);
        lv_label_set_text(label, "0%");
        lv_obj_align(label, LV_ALIGN_CENTER, 0, 30);

        /*Subscribe the label to a message. Also use the user_data to set a format string here.*/
        lv_msg_subscribe_obj(MSG_NEW_TEMPERATURE, label, "%d °C");
    }

I haven’t used LVGL but there is one line which catches my attention.

A fully portable C (C++ compatible) library with no external dependencies.

LVGL features list

Many of the newer libraries are going this route. Choosing to not build on any existing underlying library.

The second is much like what Altia (which I have not used on a commercial project) has done. You use a GUI tool to create some sexy looking UI, save the file, then have C/C++ code generated from that. Unlike the RAD tools of the early 1990s, this class of tool isn’t trying to generate code for an entire application, just the UI. You don’t have to hack the code to get that last 10% because all of the other tools you use to do the back end are your own. You aren’t hamstrung by a built in report writer, database, etc.

Oddity to Know About

I do not have links to them now, but there are some which use a 4GL type language to declare the UI. This is then fed through a compiler/translator to produce C/C++. If you’ve used Qt, think a .ui file you can edit without an XML editor that gets translated into actual code and compiled. There aren’t many of these and I don’t know if they will survive. You might also think of it as QML sans any functional code that gets translated to C/C++.

Good Design – Everything is a CICS Application

Good design doesn’t try to wedge everything into One Ring to Rule Them All. Here is basically how every good embedded or distributed system is designed.

Embedded systems have an additional hardware watchdog. What is pictured here is the software watchdog. Every one of these is its own process with its own PID. The watchdog starts first, then starts all of the others. Periodically it sends a heartbeat directly to each process. If N heartbeat responses are missed it logs the missing process and starts another. It gets a little kinky when it is your publish subscribe message queue that died since all of the other processes must be notified and resubscribe but they are all running in their own little space. The operating system can choose to put each of them on their own processor core.
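The heartbeat bookkeeping can be sketched without spawning a single process. Everything below is a simulation under stated assumptions: `Watchdog` and `Watched` are hypothetical names, each "process" is a callback that answers true or false, and the restart is a counter bump where a real watchdog would launch a replacement process and log through the system logger.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical record for one supervised process.
struct Watched {
    std::function<bool()> heartbeat;  // true when the process answered
    int missed = 0;                   // consecutive missed heartbeats
    int restarts = 0;                 // how many times it was replaced
};

class Watchdog {
    int max_missed_;
    std::map<std::string, Watched> procs_;
    std::vector<std::string> log_;

public:
    explicit Watchdog(int max_missed) : max_missed_(max_missed) {
        assert(max_missed > 0);
    }

    void watch(const std::string& name, std::function<bool()> heartbeat) {
        procs_[name] = Watched{std::move(heartbeat), 0, 0};
    }

    // One heartbeat round across every supervised process.
    void tick() {
        for (auto& entry : procs_) {
            Watched& p = entry.second;
            if (p.heartbeat()) {
                p.missed = 0;
                continue;
            }
            if (++p.missed >= max_missed_) {
                log_.push_back("restarting " + entry.first);
                p.missed = 0;
                ++p.restarts;  // a real watchdog would start a new process here
            }
        }
    }

    int restarts(const std::string& name) const { return procs_.at(name).restarts; }
    const std::vector<std::string>& log() const { return log_; }
};
```

A single missed heartbeat does nothing. Only N misses in a row trigger the log entry and the restart, and a healthy process is never touched because its neighbor died.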

No matter how you thread in your C/C++ One Ring to Rule Them All application, the C/C++ compiler cannot help you split it across cores. Too much has to happen in that primary thread. You are always going to be hamstrung. Despite the purists decrying its use, you will find oodles of Qt applications calling qApp->processEvents() from a threaded process. Had to do it a lot in the code I wrote for this book.

When you are trying to create a lot of objects from a database cursor you will find they don’t fully create until the main event loop does something for them.

Summary

Today’s successful embedded systems and other applications aren’t using One Ring to Rule Them All type application frameworks and they’ve stopped paying for the commercial versions of said tools. We are now adopting the good architectural principles real computers with real operating systems had in the 1980s. One task requires one process/server. Run everything under an application control management system. For now, that’s our watchdog process. We are back to wanting specialized libraries that don’t walk on each other.

When you create a serial communication process you can fully test it without any GUI if it connects to the publish subscribe message queue and has a messaging API. You tell it how to configure and open via a message then subscribe to the messages it sends. All clean and self-contained. This works for any device or external entity, even a relational or NoSQL database.
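Here is roughly what "testable without any GUI" looks like, shrunk down to one file. Treat it as a sketch under loud assumptions: `MessageBus` and `SerialService` are invented, the topic names are made up, and the bus is an in-process map of callbacks where a real design would put each service in its own process talking over a proper message queue.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical in-process stand-in for the publish subscribe message queue.
class MessageBus {
    using Handler = std::function<void(const std::string&)>;
    std::map<std::string, std::vector<Handler>> subscribers_;

public:
    void subscribe(const std::string& topic, Handler handler) {
        subscribers_[topic].push_back(std::move(handler));
    }

    void publish(const std::string& topic, const std::string& payload) {
        auto it = subscribers_.find(topic);
        if (it == subscribers_.end()) return;
        auto handlers = it->second;  // copy so handlers may publish or subscribe
        for (auto& handler : handlers) handler(payload);
    }
};

// Hypothetical serial service: configured by message, reports by message.
class SerialService {
    MessageBus& bus_;
    bool open_ = false;
    std::string config_;

public:
    explicit SerialService(MessageBus& bus) : bus_(bus) {
        bus_.subscribe("serial/open", [this](const std::string& config) {
            config_ = config;
            open_ = true;
            bus_.publish("serial/status", "open " + config_);
        });
    }

    // Stand-in for a line of data arriving from the device.
    void on_line_received(const std::string& line) {
        if (open_) bus_.publish("serial/rx", line);
    }
};
```

The test harness is just another subscriber. It sends the open message, feeds in fake device data, and checks what comes back on the bus, all without a widget in sight.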

Never use a library that has to have every row from a cursor loaded into a software table for display. Today’s databases can give you 2TB in a single result set like it was nothing. You don’t have that much RAM.
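One way around loading the whole result set is to keep only a window of rows in memory. The sketch below assumes a hypothetical `fetch(offset, limit)` callback standing in for whatever your database API provides, and `PagedView` is an invented class. A real implementation would also cache more than one page and deal with rows changing underneath it.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical view that holds one page of rows, never the full result set.
class PagedView {
public:
    using Fetch =
        std::function<std::vector<std::string>(std::size_t offset, std::size_t limit)>;

    PagedView(Fetch fetch, std::size_t page_size)
        : fetch_(std::move(fetch)), page_size_(page_size) {
        assert(page_size_ > 0);
    }

    // Returns the row at index, or nullptr when index is past the end.
    // The pointer is only valid until the next call that changes pages.
    const std::string* row(std::size_t index) {
        const std::size_t start = (index / page_size_) * page_size_;
        if (!loaded_ || start != page_start_) {
            page_ = fetch_(start, page_size_);  // replaces the previous page
            page_start_ = start;
            loaded_ = true;
        }
        const std::size_t i = index - page_start_;
        return i < page_.size() ? &page_[i] : nullptr;
    }

    std::size_t rows_in_memory() const { return page_.size(); }

private:
    Fetch fetch_;
    std::size_t page_size_;
    std::size_t page_start_ = 0;
    std::vector<std::string> page_;
    bool loaded_ = false;
};
```

Memory use is bounded by the page size no matter how big the result set is. The display asks for the rows it can actually show and nothing more.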

You can still use things like CopperSpice, wxWidgets, etc. but you cannot continue trying to stuff everything into one binary. We simply are at a stage where no company can justify paying huge dollars for a kitchen sink framework with a bug database the size of a skyscraper.